Test planning is the hidden bottleneck of quality assurance: repetitive, manual, and disconnected from developer flow. I designed the Progressive Assistance Model (PAM), a framework for contextual AI that amplifies developer intent instead of replacing it. The redesign cut planning time nearly in half and made trust in automation measurable.

All visuals and product details are fully anonymized and recreated to respect confidentiality while accurately reflecting the challenges, design process, and impact of my work.

Developers spent ~20% of planning time searching for context and re-entering duplicate test data.

The experience broke cognitive flow and eroded confidence in tooling.

“I copy test steps from past cases every time.” — Developer pain point

This fragmented process created recurring issues across planning, review, and oversight.

From stakeholder conversations and workflow analysis, key themes emerged. I aligned each pain point with supporting research insights and proposed design goals to turn them into actionable solutions.

Fragmented copy-paste across tools

Developers switch between PRDs, test plans, and trackers to move the same details.

“Most planning time is spent reformatting and pasting from other docs.”

AI in the flow of work

Surface AI help directly in context. No extra tabs.

Review bottlenecks hide issues

One-by-one reviews slow releases and let problems slip through.

“Reviewing 100+ cases manually is unrealistic. We skim and miss things.”

Faster reviews

Batch actions and previews make large reviews quick and manageable.

Speed vs. oversight trade-off

Teams want speed but also need visibility and trust in results.

“Automation helps, but I need to see what changed and why.”

Keep developers in control

All suggestions stay editable, reviewable, and rejectable. AI proposes, humans decide.

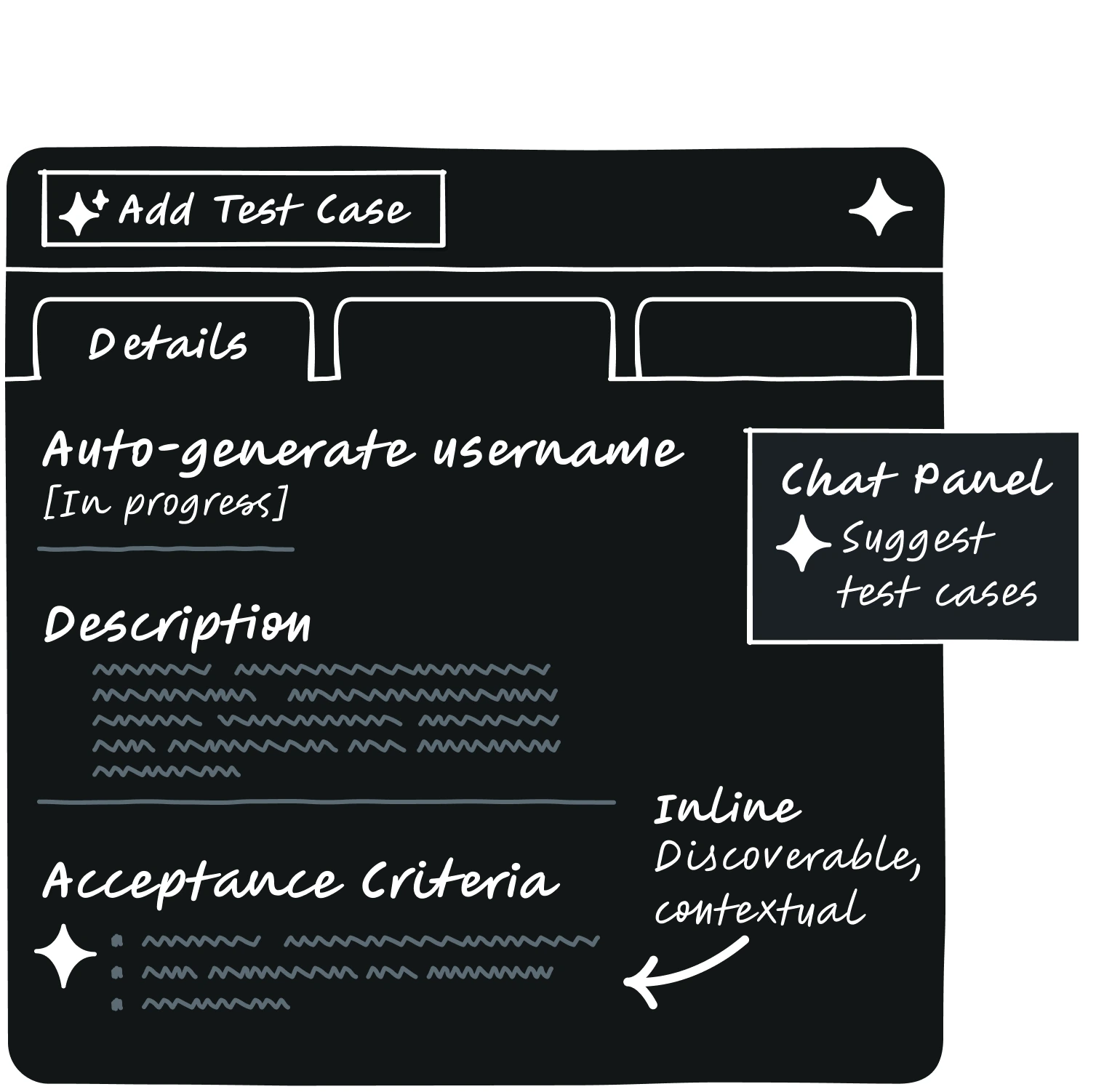

I mapped potential moments where AI could assist during test creation through the lens of discoverability and focus.

The goal was to make assistance feel available yet invisible.

Universal access for power users anywhere in the experience.

Expandable guidance and deeper exploration without blocking the workspace.

Context-aware help near acceptance criteria, aligned to intent.

In early prototypes, assistance appeared through modals and a global chat panel. What seemed flexible in theory proved distracting in practice, breaking user flow as attention fragmented across multiple panels.

I shifted to inline assist bubbles within the editor, keeping guidance visible only when relevant and preserving uninterrupted focus.

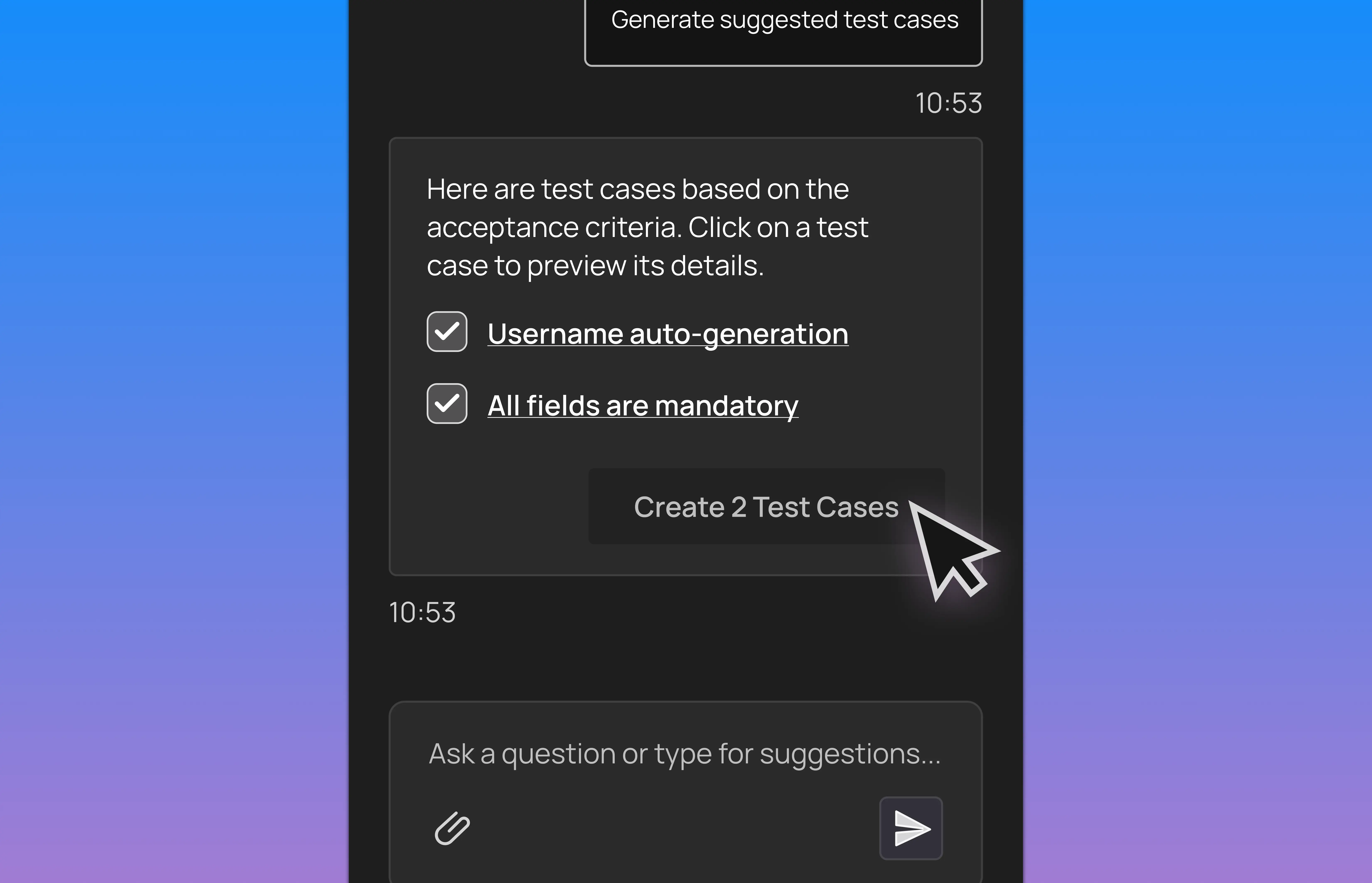

Test case generation took place inside the chat panel, allowing developers to edit and approve results while keeping the workspace in view.

Assistance builds trust when it aligns with attention, not when it competes with it.

This insight became the foundation for the Progressive Assistance Model (PAM), a scalable UX framework that adapts AI visibility to human focus by defining when, where, and how AI appears within developer workflows.

With PAM defined, the next step was to make AI feel like a partner rather than a command line.

Each refinement focused on the same issues that sparked the project: fragmented workflows, slow reviews, and a lack of trust.

The goal was simple: turn intelligence into presence and create help that feels human.

The inline icon appears only when a developer hovers over acceptance criteria: a quiet cue that says “I am here if you need me.”

Suggestions appear only after the developer asks for them. The user keeps control and the system does not interrupt.

Developers refine or regenerate test cases in the same panel. No tab switching. No lost focus. Just flow.

Multiple cases can be reviewed and accepted together. Repetition turns into confident, high-speed decisions.

Demo of progressive assistance for test case planning. Inline assist appears near acceptance criteria, the panel opens for generation, and a review pass lets developers edit cases and add them to the suite. HUD chips show coverage and changes, and the coverage indicator animates to show improvement.

To validate the redesign's value, I modeled the new flow against the original six-step manual process.

These projections are based on modeled task durations, informed by internal discussions and observed workflow patterns.

Even with conservative modeling, the redesigned flow turns repetitive work into focused oversight, reducing a 30-minute task to roughly five minutes.

Beyond modeled efficiency gains, I ran dry runs with developers and PMs to validate comprehension and trust.

Developers quickly understood inline assist cues without onboarding.

Insight: Clear discoverability validated the PAM “inline first” principle, showing that subtle inline cues were self-explanatory and trustworthy.

Stakeholders emphasized that contextual triggers felt less intrusive than global prompts.

Insight: This reinforced that assistance works best when aligned with focus; AI should adapt to attention, not compete for it.

One participant noted that the chat panel “felt helpful without interrupting flow.”

Insight: This confirmed the perceived value of AI as a partner within the flow: intelligence integrated, not imposed.

Designing contextual AI taught me that speed only matters when trust comes first.

By introducing assistance progressively, we built confidence before automation and transformed friction into flow.

The Progressive Assistance Model (PAM) became a reusable pattern I later incorporated into other areas of the ecosystem: